The use of artificial intelligence has exploded in recent years, yet despite its many benefits, not everyone is satisfied with its methods.

One of those people is Eric Slyman.

Slyman is a third-year computer science doctoral student at Oregon State University who led the recent development of FairDeDup.

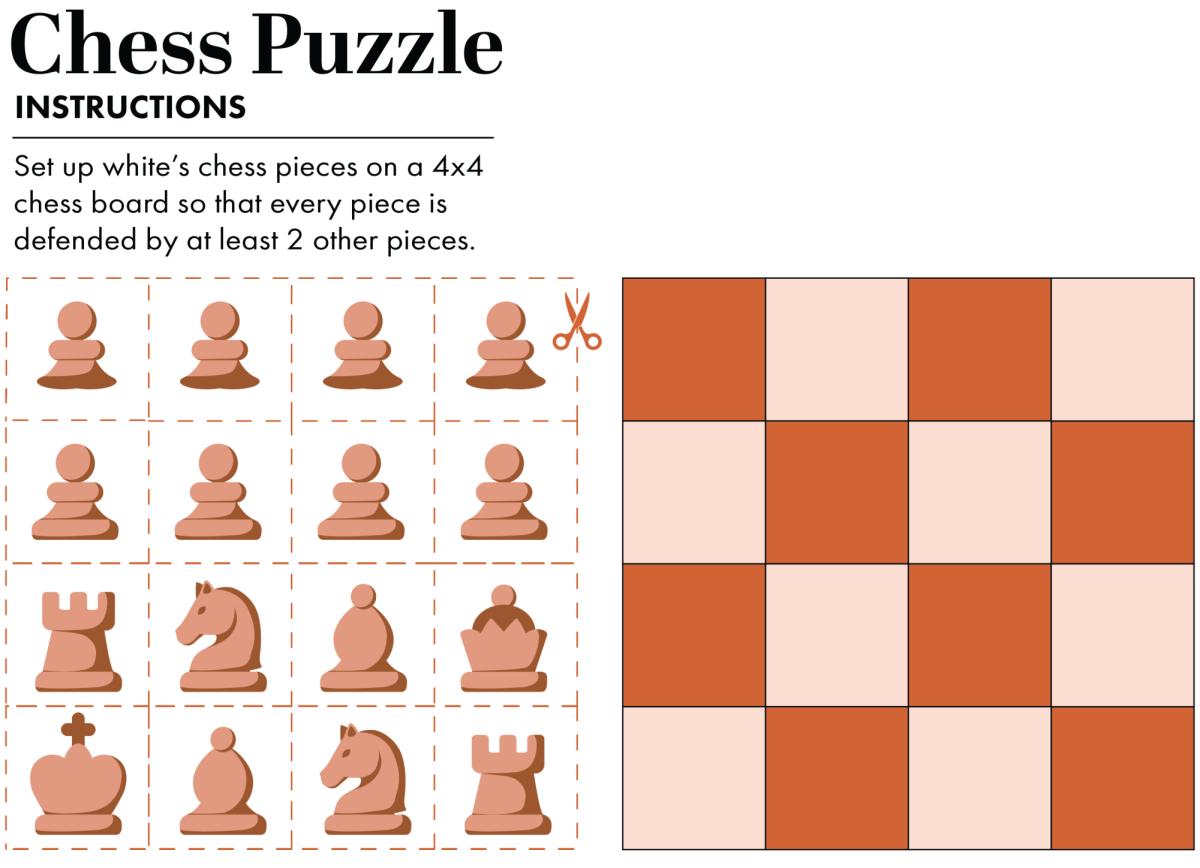

FairDeDup, which stands for Fair Deduplication, is a recently developed computer vision algorithm that aims to produce more accurate and fair representations of people and groups.

The algorithm builds on a previous method called SemDeDup, short for Semantic Deduplication, which aims to remove repetitive data from very large data sets.

“This is something we call vision and language AI; AI that can see and speak about the world,” Slyman said. “You can think about this like the kind of AI that, if you were to take a photo, can write a caption for you, or could answer questions about what you see.”

Specifically, Slyman’s method is applied to images and captions in a process called pruning, in which a large data set of images and captions, typically millions or billions of them, is reduced to a smaller collection that still represents the whole set.
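To make the idea of pruning concrete, here is a minimal Python sketch of removing near-duplicates. It is not the FairDeDup or SemDeDup code. It assumes each image has already been turned into a list of numbers (an embedding), and the function names, the toy data and the 0.95 similarity cutoff are all hypothetical choices made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def prune_near_duplicates(embeddings, threshold=0.95):
    """Return the indices of items to keep.

    An item is kept only if it is not too similar to any item already kept.
    The threshold is an assumed value, not one taken from the research.
    """
    kept = []
    for i, vec in enumerate(embeddings):
        if all(cosine(vec, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


# Hypothetical toy data: items 0 and 1 are near-duplicates, as are items 2 and 3.
toy = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.02, 1.0]]
kept = prune_near_duplicates(toy)
print(kept)  # [0, 2]
```

In this toy version the first image in each group of look-alikes is simply the one that survives. Which image to keep is exactly the kind of decision a fairness-aware method would revisit.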

Fairness problems arise when, for example, someone searches for images of doctors and is met with results that overrepresent one group or ethnicity of people. Academic work in the field of AI has intensified in the last 10 years, and Slyman called previous models unfair.

“2017 is I think when some companies started to have the old classification models actually out in the wild,” Slyman said.

These older classification models perform tasks such as face classification and recidivism prediction, which estimates how likely a convicted criminal is to reoffend.

“We started to see papers that say things, in fields like recidivism prediction, that people of color are more likely to commit a crime than you are, if you’re a white woman,” Slyman said.

These models, however, do not always accurately reflect reality.

“You’re just sticking a number by people’s skin color. And with face classification, critics of the (image filtering) AIs were saying that people who are underrepresented are not picked up by your face recognition algorithm, or are getting classified as really nasty things,” Slyman said.

Slyman began publishing in the field of artificial intelligence in 2023.

With FairDeDup, Slyman and his team of researchers aim to mitigate the negative influence of AI. Problems arise when people are shown biased search results, such as results that overrepresent one group, which can lead them to form biased opinions about underrepresented groups.

“There are more subtle cues that you might see, such as your female doctors looking a little more like nurses, for one reason or another…that could still perpetuate negative thoughts or stereotypes or less than optimal attitudes towards people from underrepresented groups to succeed,” Slyman said.

So how does FairDeDup make searches more fair?

“What my team does is change that heuristic, that little decision making method that we use for which images to keep and which ones to remove. … that doesn’t actually affect the accuracy of the model. And so we say…‘we want you to select data points equally across race, gender, ethnicity, just by telling it in natural language that that’s what we want,’” Slyman said.
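A rough illustration of what changing the keep-or-remove heuristic could look like is sketched below in Python. It is a simplified stand-in, not the team's actual method. It assumes the near-duplicate images have already been grouped into clusters and that every image carries a group label, whereas the approach Slyman describes specifies the desired balance in natural language. The names `pick_balanced`, `clusters` and `group_of` are hypothetical.

```python
from collections import Counter


def pick_balanced(clusters, group_of):
    """Keep one item per cluster, favoring the group kept least often so far.

    clusters: list of lists of item names (each list holds near-duplicates).
    group_of: dict mapping each item name to an assumed group label.
    """
    kept = []
    counts = Counter()
    for cluster in clusters:
        # Ties go to the earliest member, since min() returns the first minimum.
        choice = min(cluster, key=lambda item: counts[group_of[item]])
        kept.append(choice)
        counts[group_of[choice]] += 1
    return kept


# Hypothetical toy data: every cluster has one item from group "a" and one from "b".
clusters = [["a1", "b1"], ["a2", "b2"], ["a3", "b3"], ["a4", "b4"]]
group_of = {name: name[0] for cluster in clusters for name in cluster}

kept = pick_balanced(clusters, group_of)
print(kept)  # ['a1', 'b2', 'a3', 'b4']
```

A rule that always kept the first image in each cluster would retain only group "a" here. The balanced rule keeps two images from each group while still keeping exactly one image per cluster.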

As for the real-world applications of FairDeDup, Slyman said that Big Tech is the primary arena.

“This is the kind of thing that would mainly be used by large scale industry practitioners… like Meta, Google, Apple and Adobe,” Slyman said.

Tech companies could then integrate this process into their model development cycle. Before an AI model like ChatGPT is finalized for sale or use, multiple models are tested in an attempt to estimate their efficacy.

“This enables people to do that iterative development process in a way that’s more accurate to real life,” Slyman said.
